GitHub has long supported self-hosted runners. These allow you to own the environment running your CI/CD pipelines. The challenge that most teams have is that they need a solution that provides ephemeral runners. It also needs to dynamically scale up and down based on demand. This is where the Actions Runner Controller comes in. It is an open-source Kubernetes operator that allows you to orchestrate and scale your self-hosted runners.

There are two flavors: the original community-supported operator and the new GitHub-supported operator. GitHub’s new operator is the recommended solution, providing a streamlined experience using “runner scale sets”. It consists of two parts: the gha-runner-scale-set-controller and the gha-runner-scale-set. You can read more about the design of the system, but the short version is that the controller manages the scale sets, and the scale sets create the ephemeral runners.

One challenge with the GitHub-supported operator is that it does not officially support Windows runners. This is a problem for many organizations that have a mix of Linux and Windows workloads. Fortunately, there is a way to use Windows runners with the GitHub-supported operator. This post will walk you through the steps to get it working on Azure Kubernetes Service (AKS), but the approach applies to any Kubernetes cluster.

The runner image

First, we need a Windows container image for the runner. GitHub documents the requirements for runner container images, so we just need to build a compatible base image. This means:

- The runner binary is in `/home/runner` (`c:\home\runner` on Windows)
- The runner binary is launched using `/home/runner/run.sh` (`c:\home\runner\run.cmd`)
- To support Kubernetes mode, the runner container hooks must be placed in `/home/runner/k8s` (`c:\home\runner\k8s`)
- There’s also a general requirement for all self-hosted runners. The image should have the latest runner version (it will fail if it gets too far behind).

I’ve published a base image that meets these requirements on GitHub (https://github.com/kenmuse/arc-windows-runner). The image is not being regularly built, so you’ll want to fork the repository and update the GitHub Actions workflow to include a scheduled trigger for nightly builds:

```yaml
on:
  schedule:
    - cron: "0 1 * * *"
```

The image contains Windows Server Core 2022 (LTSC), the latest version of the runner, the current version of Git (installed using choco), and the Kubernetes hooks. Feel free to extend it to include any other tools you need.

The Azure environment

Setting up an AKS cluster on Azure is straightforward. Microsoft provides a walkthrough using the portal and a tutorial using the CLI.

During the setup, you’ll need to ensure the following:

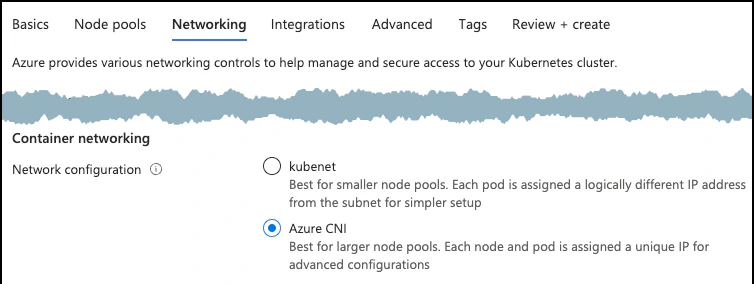

The cluster uses Azure CNI networking. This is required to support Windows nodes. In the portal, the network configuration is available under the Networking tab. When using the CLI, you’ll need to specify `--network-plugin azure`.

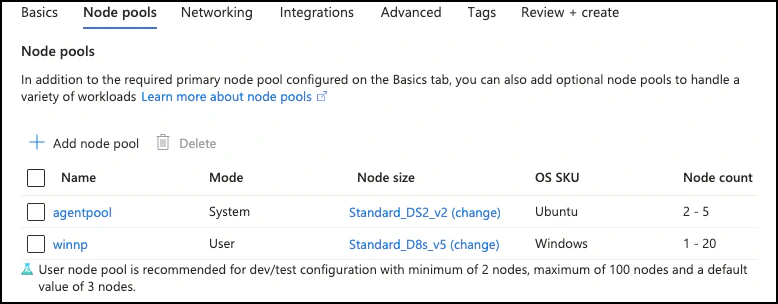

You must create a secondary node pool with Windows nodes. The primary system pool will always contain Linux nodes.

The Windows node pool must be tainted to prevent Linux workloads from being scheduled on its nodes. This can be done in the portal at creation time or using the CLI (`az aks nodepool`) with `--node-taints 'kubernetes.io/os=windows:NoSchedule'`.
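For the CLI route, the requirements above could be sketched roughly as follows. The resource group, cluster, and pool names are placeholders I made up for illustration, and the node counts are arbitrary; only `--network-plugin azure`, the Windows OS type, and the taint come from the requirements described here. The commands are wrapped in functions so nothing runs until you call them.

```shell
# Hypothetical names for illustration; replace them with your own values.
RESOURCE_GROUP="${RESOURCE_GROUP:-arc-demo-rg}"
CLUSTER_NAME="${CLUSTER_NAME:-arc-demo-aks}"
WINDOWS_POOL="${WINDOWS_POOL:-npwin}"  # Windows pool names are limited to 6 characters

# Create the cluster with Azure CNI so that Windows node pools are supported.
# The default (system) node pool created here runs Linux.
create_cluster() {
  az aks create \
    --resource-group "$RESOURCE_GROUP" \
    --name "$CLUSTER_NAME" \
    --network-plugin azure \
    --node-count 1 \
    --generate-ssh-keys
}

# Add a secondary Windows node pool, tainted so that only pods with a
# matching toleration are scheduled onto it.
add_windows_pool() {
  az aks nodepool add \
    --resource-group "$RESOURCE_GROUP" \
    --cluster-name "$CLUSTER_NAME" \
    --name "$WINDOWS_POOL" \
    --os-type Windows \
    --node-count 1 \
    --node-taints "kubernetes.io/os=windows:NoSchedule"
}
```

Calling `create_cluster` followed by `add_windows_pool` should give you a cluster shaped like the one used in the rest of this post. Sizing, VM SKUs, and any NAT Gateway configuration are left out and will depend on your environment.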

I strongly recommend adding a NAT Gateway to your AKS subnet to avoid SNAT port exhaustion. You can get by without one when dealing with a small set of infrequent runners, but it will often be required at scale.

Required tools

Unless you’re using Azure Cloud Shell, you’ll need to ensure you have three tools:

- The Azure CLI (instructions)
- `kubectl` (instructions). You can also install this using `az aks install-cli`.
- `helm` (instructions)

You can run all of this in a container as well. For a basic dev container, you can use this Dockerfile:

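```dockerfile
FROM mcr.microsoft.com/vscode/devcontainers/base:jammy

# Populated automatically by BuildKit with the target architecture (amd64, arm64)
ARG TARGETARCH

# Install the Azure CLI
RUN curl -sL https://aka.ms/InstallAzureCLIDeb | sudo bash

# Download the latest stable kubectl for the target architecture and install it
RUN curl -LO "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/${TARGETARCH}/kubectl"
RUN sudo install -o root -g root -m 0755 kubectl /usr/local/bin/kubectl

# Install Helm 3
RUN curl https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3 | bash
```

Connecting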

Unless you’re working in Azure Cloud Shell, you’ll need to connect to the cluster by downloading the configuration from Azure.

- Use `az login` to do an interactive sign in
- `az account set --subscription <subscriptionId>` to select the subscription with the AKS instance
- `az aks get-credentials --resource-group <group> --name <clusterName>` to download the configuration and create a context for `kubectl`.
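Once the context is in place, it can be worth confirming that the cluster looks the way the later steps expect. The following is a minimal sketch, not part of the original walkthrough; the function names are my own, and it only relies on standard `kubectl get nodes` options.

```shell
# List the nodes with an extra column showing the kubernetes.io/os label,
# so you can see which nodes are Linux and which are Windows.
show_node_os() {
  kubectl get nodes -L kubernetes.io/os
}

# Show the taint keys on each node. The Windows nodes should list
# kubernetes.io/os if the pool was tainted as described earlier.
show_node_taints() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,TAINTS:.spec.taints[*].key'
}
```

Run `show_node_os` and `show_node_taints` after `az aks get-credentials`. If no Windows nodes appear, or they carry no taint, revisit the node pool setup before installing anything.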

Installing the controller

Because the Windows node pool is tainted, only workloads that can tolerate that taint will be scheduled on that pool. This is important, because we want to ensure that the ARC controller is only scheduled on Linux nodes. With the taint in place, the controller can be installed as documented.
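For reference, the documented installation boils down to a single Helm command. This sketch assumes the release name `arc` and the namespace `arc-systems`, which follow GitHub’s quickstart and line up with the controller service account used later in this post (`arc-gha-rs-controller` in `arc-systems`). If you choose different names, the service account details will differ too.

```shell
# Assumed values, chosen to match the service account referenced later.
CONTROLLER_NAMESPACE="${CONTROLLER_NAMESPACE:-arc-systems}"
CONTROLLER_RELEASE="${CONTROLLER_RELEASE:-arc}"

# Install the ARC controller chart. No tolerations are added, so the
# tainted Windows pool is never a candidate for the controller pod.
install_controller() {
  helm install "$CONTROLLER_RELEASE" \
    --namespace "$CONTROLLER_NAMESPACE" \
    --create-namespace \
    oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set-controller
}

# Show where the controller pod was scheduled; the NODE column should
# name one of the Linux nodes.
show_controller_pods() {
  kubectl get pods --namespace "$CONTROLLER_NAMESPACE" -o wide
}
```

After `install_controller` completes, `show_controller_pods` is a quick way to confirm that the taint kept the controller off the Windows pool.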

Creating the secret

I recommend creating a Kubernetes secret to enable the operator to authenticate with GitHub. A GitHub App is preferred over a personal access token. The steps are covered here.

For this walkthrough, I’ll assume we’re using a secret named gha-runner-secret (instead of pre-defined-secret).
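As a rough sketch of what that looks like for a GitHub App, the secret can be created with `kubectl`. The key names (`github_app_id`, `github_app_installation_id`, `github_app_private_key`) follow GitHub’s documentation; the `arc-runners` namespace matches the one used for the scale set below; the function names and the idea of reading the private key from a file path are my own choices for illustration.

```shell
RUNNER_NAMESPACE="${RUNNER_NAMESPACE:-arc-runners}"

# The secret must live in the same namespace as the runner scale set,
# so create that namespace first if it does not exist yet.
create_runner_namespace() {
  kubectl create namespace "$RUNNER_NAMESPACE"
}

# Arguments: <app id> <installation id> <path to the app's private key file>
create_runner_secret() {
  kubectl create secret generic gha-runner-secret \
    --namespace "$RUNNER_NAMESPACE" \
    --from-literal=github_app_id="$1" \
    --from-literal=github_app_installation_id="$2" \
    --from-file=github_app_private_key="$3"
}
```

You would call it with your own values, for example `create_runner_secret <appId> <installationId> ./private-key.pem`. Using `--from-file` keeps the multi-line private key out of your shell history.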

Installing the runner scale set

GitHub provides the steps for installing the scale set, but the steps assume that you’re running on a Linux cluster. The chart for the scale set actually requires Linux, but the dynamically created runners must be scheduled on the Windows pool. To do this, we’ll modify the command slightly and use a values.yml file to override the default values.

To properly schedule and run the Windows containers, we need three things in the template for the runner, plus one chart-level setting:

- Configure the container’s command to be `cmd.exe /c \home\runner\run.cmd`.
- Add a toleration for the runner pod to allow it to be scheduled on the Windows nodes.
- Add an affinity to ensure that the runner pod is only scheduled on Windows nodes.
- Outside the template, manually specify the name and namespace for the `controllerServiceAccount` to ensure it is properly discovered.

A starting point for the values.yml would look like this:

```yaml
controllerServiceAccount:
  name: arc-gha-rs-controller
  namespace: arc-systems
template:
  spec:
    containers:
      - name: runner
        image: ghcr.io/kenmuse/arc-windows-runner:latest
        command: ["cmd.exe", "/c", "\\home\\runner\\run.cmd"]
    tolerations:
      - key: kubernetes.io/os
        operator: Equal
        value: windows
        effect: NoSchedule
    affinity:
      nodeAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          nodeSelectorTerms:
            - matchExpressions:
                - key: kubernetes.io/os
                  operator: In
                  values:
                    - windows
githubConfigUrl: https://github.com/MYORG
githubConfigSecret: gha-runner-secret
```

You’ll want to update the contents to use your Windows container image, the correct controllerServiceAccount details, and a githubConfigUrl that points to your organization.

With this values.yml, you can now deploy the scale set:

```shell
helm install "arc-runner-set" -f values.yml --namespace "arc-runners" --create-namespace \
  oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set
```

The runners should now be properly scheduled onto the Windows nodes. You can verify this by configuring a workflow that uses the runners. The workflow file should contain a runs-on property that specifies the runner set:

```yaml
jobs:
  job_name:
    runs-on: arc-runner-set
```

What’s the catch?

Windows containers come with a number of challenges. First, they are generally slower to start than a comparable Linux container and require more resources, which can mean larger VMs for their nodes. The images are also much larger than Linux images (2.76 GB for Server Core), which adds to the resource requirements. Additionally, Windows containers are not as well supported in the Kubernetes ecosystem as Linux containers, so you may encounter unexpected behaviors. For example, when under pressure to scale up rapidly, you may encounter lock contention in the Azure CNI plugin. This can cause the pod to enter a retry sequence, delaying the container’s start.

Microsoft provides some recommendations for performance-tuning Windows containers. In addition, the Kubernetes documentation recommends always reserving at least 2GiB of memory for the operating system to prevent over-provisioning. This is a common Windows practice, but even more important for ensuring stable containers. It’s worth mentioning that AKS reserves this memory automatically.

These challenges are part of why I recommend Linux runners whenever possible, especially when using Kubernetes. While you can make Windows work with ARC, running in a full VM will generally provide greater reliability and security. In fact, it makes sense to consider GitHub-hosted runners if you want a scalable, ephemeral environment for Windows workloads. That option also has the benefit of being fully supported by GitHub.

Conclusion

Kubernetes is highly configurable and makes hybrid environments with Linux and Windows containers possible. Because Actions Runner Controller is open source, it is easier to understand how it operates and to customize it to our needs. This includes enabling Windows runners, even though they aren’t officially supported.